Hadoop on Windows

Author: Z | 2025-04-23



August 30, 2016

My latest Pluralsight course is out now: Hadoop for .NET Developers. It takes you through running Hadoop on Windows and using .NET to write MapReduce queries, proving that you can do Big Data on the Microsoft stack.

The course has five modules, starting with the architecture of Hadoop and working through a proof-of-concept approach, evaluating different options for running Hadoop and integrating it with .NET.

1. Introducing Hadoop

Hadoop is the core technology in Big Data problems: it provides scalable, reliable storage for huge quantities of data, and scalable, reliable compute for querying that data. To start the course I cover HDFS and YARN: how they work and how they work together. I use a 600MB public dataset (from the 2011 UK census), upload it to HDFS and demonstrate a simple Java MapReduce query. Unlike my other Pluralsight course, Real World Big Data in Azure, there are word counts in this course; to focus on the technology, I keep the queries simple for this one.

2. Running Hadoop on Windows

Hadoop is a Java technology, so you can run it on any system with a compatible JVM. You don’t need to run Hadoop from the JAR files, though; there are packaged options which make it easy to run Hadoop on Windows. I cover four options:

- Hadoop in Docker, using my Hadoop with .NET Core Docker image to run a Dockerized Hadoop cluster;
- Hortonworks Data Platform, a packaged Hadoop distribution which is available for Linux and Windows;
- Syncfusion’s Big Data Platform, a new Windows-only Hadoop distribution which has a friendly UI;
- Azure HDInsight, Microsoft’s managed Hadoop platform in the cloud.

If you’re starting out with Hadoop, the Big Data Platform is a great place to start: it’s a simple two-click install, and it comes with lots of sample code.

3. Working with Hadoop in .NET

Java is the native programming language for MapReduce queries, but Hadoop provides integration for any language with the Hadoop Streaming API.
I walk through building a MapReduce program with the full .NET Framework, then using .NET Core, and compare those options with Microsoft’s Hadoop SDK for .NET (spoiler: the SDK is a nice framework, but hasn’t seen much activity for a while). Using .NET Core for MapReduce jobs gives you the option to write queries in C# and run them on Linux or Windows clusters, as I blogged about in Hadoop and .NET Core - A Match Made in Docker.

4. Querying Data with MapReduce

Basic MapReduce jobs are easy with .NET and .NET Core, but in this module we look at more advanced functionality and see how to write performant, reliable .NET MapReduce jobs. Here I extend the .NET queries to use:

- combiners;
- multiple reducers;
- the distributed cache;
- counters and logging.

You can run Hadoop on Windows and use .NET for queries, and still make use of high-level Hadoop functionality to tune your queries.

5. Navigating the Hadoop Ecosystem

Hadoop is a foundational technology, and querying with MapReduce gives you a lot of power, but it’s a technical approach which needs custom-built components. A whole ecosystem has emerged to take advantage of the core Hadoop foundations of storage and compute, but make accessing the data faster and easier. In the final module, I look at some of the major technologies in the ecosystem and see how they work with Hadoop, with each other, and with .NET:

- Hive: querying data in Hadoop with a SQL-like language;
- HBase: a real-time Big Data NoSQL database which uses Hadoop for storage;
- Spark: a compute engine which uses Hadoop, but caches data in memory to provide fast data access.

If the larger ecosystem interests you, I go into more depth in a couple of free eBooks, Hive Succinctly and HBase Succinctly, and I also cover them in detail on Azure in my Pluralsight course HDInsight Deep Dive: Storm, HBase and Hive.

The goal of Hadoop for .NET Developers is to give you a thorough grounding in Hadoop, so you can run your own PoC using the approach in the course to evaluate Hadoop with .NET for your own datasets.
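The Hadoop Streaming API mentioned in module 3 is what lets non-Java code act as a mapper or reducer: Hadoop feeds input lines to the program's stdin, reads tab-separated key/value lines from its stdout, and sorts those lines by key between the map and reduce phases. The course itself uses .NET; the sketch below instead uses plain shell functions to show the same contract, and reuses the reducer as a combiner (one of the module 4 techniques), which is safe here because summing counts is associative. The function names, the `mapper.sh`/`reducer.sh` file names and the HDFS paths are hypothetical.

```bash
#!/usr/bin/env bash
# Word count in the Hadoop Streaming style, written as shell functions.
# Streaming contract: the mapper reads raw text on stdin and writes
# "key<TAB>value" lines; Hadoop sorts those lines by key; the reducer reads
# the sorted pairs on stdin and writes one aggregated line per key.
set -euo pipefail

# Mapper: lowercase the text, put one word per line, emit "word<TAB>1".
wc_mapper() {
  tr '[:upper:]' '[:lower:]' \
    | tr -s '[:space:]' '\n' \
    | awk 'NF { print $0 "\t1" }'   # NF skips empty lines
}

# Reducer: input is sorted by key, so all values for one word are adjacent.
# Summing is associative, so the same function can also serve as a combiner.
wc_reducer() {
  awk -F '\t' '
    NR > 1 && $1 != current { print current "\t" sum; sum = 0 }
    { current = $1; sum += $2 }
    END { if (NR > 0) print current "\t" sum }
  '
}

# Local dry run of what Hadoop does around these functions: map, sort, reduce.
run_local() {
  wc_mapper | LC_ALL=C sort | wc_reducer
}

# On a cluster, the two functions would live in standalone scripts
# (hypothetical names mapper.sh and reducer.sh) submitted roughly like this;
# the HDFS paths are placeholders:
#   hadoop jar "$HADOOP_HOME"/share/hadoop/tools/lib/hadoop-streaming-*.jar \
#     -files mapper.sh,reducer.sh \
#     -mapper mapper.sh -combiner reducer.sh -reducer reducer.sh \
#     -numReduceTasks 2 \
#     -input /data/census -output /data/census-wordcount
```

Piping a small sample file through `run_local` is a cheap way to check the scripts before submitting a job; the `-combiner` and `-numReduceTasks` streaming options correspond to the combiners and multiple reducers covered in module 4.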

One cost that all Hadoop users must take into account is the cost of hardware. Hadoop is software, but it runs on clusters of machines that typically need at least one server room, which means high electricity costs. Hadoop users must also spend a lot of money updating and repairing those machines. All in all, Hadoop requires a significant budget to run properly.

Working in real time

One major limitation of Hadoop is its lack of real-time responses, in both operational support and data processing. If a Hadoop user needs help operating the Hadoop software on their server room machines, that assistance will not be provided in real time. They have to wait for a response, which can affect their work. Similarly, a Hadoop user who needs to analyze data quickly to make a data-driven decision cannot do so: Hadoop’s MapReduce processing is batch-oriented, not real-time. That can be a challenge in fast-paced environments where decisions must be made at short notice.

Scaling

Hadoop can also be challenging to scale. Because Hadoop is a monolithic technology, organizations are often stuck with the version of Hadoop they started with, even as they grow and handle larger amounts of data. If they want an upgraded version of Hadoop, they either have to replace their entire setup, which is expensive, or run a new version of Hadoop on older machines, which demands more computing power and more maintenance from the business.

In Hadoop for Windows Succinctly, author Dave Vickers provides a thorough guide to using Hadoop directly on Windows operating systems. Topics include:

- Installing Hadoop for Windows
- Enterprise Hadoop for Windows
- Programming Enterprise Hadoop in Windows
- Hadoop Integration and Business Intelligence (BI) Tools in Windows

Artifacts in the Apache Hadoop Maven group, with their last release dates:

- Apache Hadoop Common: Oct 18, 2024
- Apache Hadoop Client aggregation pom with dependencies exposed: Oct 18, 2024
- Apache Hadoop HDFS: Oct 18, 2024
- Apache Hadoop MapReduce Core: Oct 18, 2024
- Hadoop Core: Jul 24, 2013
- Apache Hadoop Annotations: Oct 18, 2024
- Apache Hadoop Auth - Java HTTP SPNEGO: Oct 18, 2024
- Apache Hadoop Mini-Cluster: Oct 18, 2024
- Apache Hadoop YARN API: Oct 18, 2024
- Apache Hadoop MapReduce JobClient: Oct 18, 2024
- Apache Hadoop YARN Common: Oct 18, 2024
- Apache Hadoop MapReduce Common: Oct 18, 2024
- Apache Hadoop YARN Client: Oct 18, 2024
- A module that contains code to support integration with Amazon Web Services and declares the dependencies needed to work with AWS services: Oct 18, 2024
- Apache Hadoop HDFS Client: Oct 18, 2024
- Apache Hadoop MapReduce App: Oct 18, 2024
- Apache Hadoop YARN Server Tests: Oct 18, 2024
- Apache Hadoop MapReduce Shuffle: Oct 18, 2024
- Hadoop Test: Jul 24, 2013
- Apache Hadoop YARN Server Common: Oct 18, 2024

Hadoop is a distributed computing framework for processing and storing massive datasets. It runs on Ubuntu and offers scalable data storage and parallel processing capabilities. Installing Hadoop enables you to handle big data challenges efficiently and extract valuable insights from your data.

Installing Hadoop on Ubuntu takes the following steps:

1. Install Java.
2. Create a user.
3. Download Hadoop.
4. Configure the environment.
5. Configure Hadoop.
6. Start Hadoop.
7. Access the web interface.

Prerequisites to Install Hadoop on Ubuntu

Before installing Hadoop on Ubuntu, make sure your system meets the following requirements:

- A Linux VPS running Ubuntu.
- A non-root user with sudo privileges.
- Access to a terminal/command line.

Complete Steps to Install Hadoop on Ubuntu

Once these prerequisites are in place, you are ready to follow the steps of this guide. By the end, you will be able to use Hadoop to manage and analyze large datasets efficiently.

Step 1: Install Java Development Kit (JDK)

Since Hadoop requires Java to run, use the following command to install the default JDK and JRE:

```bash
sudo apt install default-jdk default-jre -y
```

Then verify the installation by checking the Java version:

```bash
java -version
```

If Java is installed, you’ll see version information similar to the following (exact version strings vary):

```text
java version "11.0.16" 2021-08-09 LTS
OpenJDK 64-Bit Server VM (build 11.0.16+8-Ubuntu-0ubuntu0.22.04.1)
```

Step 2: Create a dedicated user for Hadoop & Configure SSH

Create the Hadoop user:

```bash
sudo adduser hadoop
```

Add the user to the sudo group:

```bash
sudo usermod -aG sudo hadoop
```

Switch to the Hadoop user:

```bash
sudo su - hadoop
```

Install the OpenSSH server and client:

```bash
sudo apt install openssh-server openssh-client -y
```

Then generate SSH keys:

```bash
ssh-keygen -t rsa
```

Notes:

- Press Enter to save the key to the default location.
- You can optionally set a passphrase for added security.

Now add the public key to authorized_keys:

```bash
cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
```

Set permissions on the authorized_keys file:

```bash
sudo chmod 640 ~/.ssh/authorized_keys
```

Finally, test the SSH configuration:

```bash
ssh localhost
```

Notes:

- If you didn’t set a passphrase, you should be logged in automatically.
- If you set a passphrase, you’ll be prompted to enter it. Because Hadoop’s start scripts connect to localhost over SSH, a key without a passphrase avoids being prompted every time you start the cluster.

Step 3: Download the latest stable release

To download Apache Hadoop, visit the Apache Hadoop download page, find the latest stable release (e.g., 3.3.4) and copy the download link. You can then download the release with `wget` using that link.

Extract the downloaded file:

```bash
tar -xvzf hadoop-3.3.4.tar.gz
```

Move the extracted directory:

```bash
sudo mv hadoop-3.3.4 /usr/local/hadoop
```

Create a directory for logs:

```bash
sudo mkdir /usr/local/hadoop/logs
```

Change ownership of the Hadoop directory to the hadoop user:

```bash
sudo chown -R hadoop:hadoop /usr/local/hadoop
```

Step 4: Configure Hadoop Environment Variables

Edit the .bashrc file:

```bash
sudo nano ~/.bashrc
```

Add the following environment variables to the end of the file:

```bash
export HADOOP_HOME=/usr/local/hadoop
export HADOOP_INSTALL=$HADOOP_HOME
export HADOOP_MAPRED_HOME=$HADOOP_HOME
export HADOOP_COMMON_HOME=$HADOOP_HOME
export HADOOP_HDFS_HOME=$HADOOP_HOME
export YARN_HOME=$HADOOP_HOME
export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native
export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin
export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib/native"
```

Save the changes, then reload the .bashrc file:

```bash
source ~/.bashrc
```

You are now ready to configure Hadoop itself.

Step 5: Configure Hadoop

First, edit the hadoop-env.sh file:

```bash
sudo nano $HADOOP_HOME/etc/hadoop/hadoop-env.sh
```

Now, you must add the path to Java.
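Step 5 needs the real path of the JDK, which on Ubuntu usually sits behind the `/usr/bin/java` symlink chain managed by update-alternatives. The sketch below resolves that chain to a JAVA_HOME candidate and parses the major version out of a `java -version` string, so you can confirm the JDK from Step 1 before pinning it. The function names are hypothetical, `readlink -f` assumes GNU coreutils (standard on Ubuntu), and the "8 or 11" check in the usage comment assumes the Java versions commonly supported by Hadoop 3.3.x; confirm against the documentation for your release.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Print the version string reported by `java -version`, e.g. 11.0.16.
# `java -version` writes to stderr; the version is the first quoted field.
installed_java_version() {
  java -version 2>&1 | awk -F '"' '/version/ && !done { print $2; done = 1 }'
}

# Major version from a version string: "11.0.16" -> 11, legacy "1.8.0_292" -> 8.
java_major_version() {
  local v="$1" major
  major="${v%%.*}"                   # text before the first dot
  if [ "$major" = "1" ]; then        # pre-Java 9 scheme is 1.<major>.<minor>
    v="${v#1.}"
    major="${v%%.*}"
  fi
  printf '%s\n' "${major%%[!0-9]*}"  # drop suffixes such as "-ea"
}

# Resolve a java binary through its symlinks and strip "/bin/java" to get a
# JAVA_HOME candidate. Defaults to the first java on PATH.
detect_java_home() {
  local java_bin="${1:-$(command -v java || true)}" real home
  if [ -z "$java_bin" ]; then
    echo "java not found on PATH" >&2
    return 1
  fi
  real="$(readlink -f "$java_bin")"  # e.g. /usr/bin/java -> /usr/lib/jvm/<jdk>/bin/java
  case "$real" in
    */bin/java) ;;
    *) echo "unexpected java location: $real" >&2; return 1 ;;
  esac
  home="${real%/bin/java}"
  home="${home%/jre}"                # JDK 8 layouts keep java under jre/bin
  printf '%s\n' "$home"
}

# Usage sketch (not executed here):
#   major="$(java_major_version "$(installed_java_version)")"
#   case "$major" in
#     8|11) ;;
#     *) echo "warning: Java $major may not be supported by this Hadoop release" >&2 ;;
#   esac
#   echo "export JAVA_HOME=$(detect_java_home)" >> "$HADOOP_HOME/etc/hadoop/hadoop-env.sh"
```

Deriving the path this way avoids hard-coding a directory such as `java-11-openjdk-amd64`, which changes if a different JDK package is installed later.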
If you haven’t already added the JAVA_HOME variable in your .bashrc file, include it here, together with the Hadoop classpath setting:

```bash
export JAVA_HOME=/usr/lib/jvm/java-11-openjdk-amd64
export HADOOP_CLASSPATH+=" $HADOOP_HOME/lib/*.jar"
```

Save changes and exit when you are done.

Then change your current working directory to /usr/local/hadoop/lib:

```bash
cd /usr/local/hadoop/lib
```

Next, download the javax activation JAR file into this directory with `sudo wget`. When you are finished, check the Hadoop version:

```bash
hadoop version
```

If you have followed the steps correctly, you can now configure the Hadoop core site. Edit the core-site.xml file:

```bash
sudo nano $HADOOP_HOME/etc/hadoop/core-site.xml
```

Add the default filesystem URI so that the `<configuration>` element contains:

```xml
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://0.0.0.0:9000</value>
    <description>The default file system URI</description>
  </property>
</configuration>
```

(`fs.default.name`, the property name used in some older guides, is the deprecated alias of `fs.defaultFS`.) Save changes and exit.

Create directories for the NameNode and DataNode, then give the hadoop user ownership of them:

```bash
sudo mkdir -p /home/hadoop/hdfs/{namenode,datanode}
sudo chown -R hadoop:hadoop /home/hadoop/hdfs
```

Edit the hdfs-site.xml file:

```bash
sudo nano $HADOOP_HOME/etc/hadoop/hdfs-site.xml
```

Then set the replication factor. A single-node setup has only one DataNode, so it can hold only one copy of each block. To make HDFS actually use the directories created above, also point the NameNode and DataNode storage at them; without these two properties, HDFS falls back to a location under `hadoop.tmp.dir`, which defaults to a directory in `/tmp`.

```xml
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:///home/hadoop/hdfs/namenode</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:///home/hadoop/hdfs/datanode</value>
  </property>
</configuration>
```

Save changes and exit.

Next, configure MapReduce. Edit the mapred-site.xml file:

```bash
sudo nano $HADOOP_HOME/etc/hadoop/mapred-site.xml
```

Set the MapReduce framework to YARN:

```xml
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>
```

Save changes and exit.

To configure YARN, edit the yarn-site.xml file:

```bash
sudo nano $HADOOP_HOME/etc/hadoop/yarn-site.xml
```

Enable the MapReduce shuffle service:

```xml
<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
</configuration>
```

Save changes and exit.

Format the NameNode by running the following command, which initializes the Hadoop Distributed File System (HDFS):

```bash
hdfs namenode -format
```

Step 6: Start the Hadoop Cluster

Start the NameNode and DataNode:

```bash
start-dfs.sh
```

Start the ResourceManager and NodeManager:

```bash
start-yarn.sh
```

Check the running Java processes:

```bash
jps
```

You should see processes such as NameNode, DataNode, ResourceManager, and NodeManager. If so, you are ready to access the Hadoop web interface.

Step 7: Open the web interface

Once you know your server’s IP address, open the Hadoop web interface in your web browser. Separate URLs give access to the DataNodes and to the YARN ResourceManager. The ResourceManager interface is useful for monitoring the applications running in your Hadoop cluster.

What is Hadoop and Why Install it on Linux Ubuntu?

Hadoop is a distributed computing framework designed to process and store massive amounts of data efficiently. It runs on various operating systems, including Ubuntu, and offers scalable data storage and parallel processing capabilities. Installing Hadoop on Ubuntu lets you handle big data challenges, extract valuable insights, and perform complex data analysis tasks that would be impractical on a single machine.

What are the best Features and Advantages of Hadoop on Ubuntu?

- Scalability: Easily scale Hadoop clusters to handle growing data volumes by adding more nodes.
- Fault Tolerance: Data is replicated across multiple nodes, ensuring data durability and availability.
- Parallel Processing: Hadoop distributes data processing tasks across multiple nodes, accelerating performance.
- Cost-Effective: Hadoop can run on commodity hardware, making it a cost-effective solution for big data processing.
- Open Source: Hadoop is freely available and has a large, active community providing support and development.
- Integration with Other Tools: Hadoop integrates with other big data tools like Spark, Hive, and Pig, expanding its capabilities.
- Flexibility: Hadoop supports various data formats and can be customized to meet specific use cases.

What to do after Installing Hadoop on Ubuntu?

- Configure and start the Hadoop cluster: Set up Hadoop services like the NameNode, DataNode, ResourceManager, and NodeManager.
- Load data into HDFS: Upload your data files to the Hadoop Distributed File System (HDFS) for storage and processing.
- Run MapReduce jobs: Use MapReduce to perform data processing tasks, such as word counting, filtering, and aggregation.
- Use other Hadoop components: Explore tools like Hive, Pig, and Spark for more advanced data analysis and machine learning tasks.
- Monitor and manage the cluster: Use the Hadoop web interface to monitor resource usage and job execution, and to troubleshoot issues.
- Integrate with other systems: Connect Hadoop to the other parts of your data stack.
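Step 6 asks you to read the `jps` output by eye, and the list above suggests loading data into HDFS next. The sketch below automates both as a quick smoke test: `missing_daemons` reads `jps` output (one `<pid> <MainClass>` pair per line) and reports which of the four daemons named in Step 6 are absent, and `upload_to_hdfs` wraps the standard `hdfs dfs -mkdir -p`, `-put -f` and `-ls` commands. Both function names, the `HDFS_CMD` override (which allows a dry run with `echo`) and the example paths are hypothetical.

```bash
#!/usr/bin/env bash
set -euo pipefail

# The four daemons Step 6 expects after start-dfs.sh and start-yarn.sh.
EXPECTED_DAEMONS=(NameNode DataNode ResourceManager NodeManager)

# Read `jps` output ("<pid> <MainClass>" per line) on stdin and print each
# expected daemon that is not running. Returns 1 if anything is missing.
missing_daemons() {
  local running d missing=0
  running="$(awk '{ print $2 }')"
  for d in "${EXPECTED_DAEMONS[@]}"; do
    # -x matches the whole line, so "SecondaryNameNode" does not count as "NameNode".
    if ! grep -qx -- "$d" <<< "$running"; then
      printf '%s\n' "$d"
      missing=1
    fi
  done
  return "$missing"
}

# Hypothetical helper: create an HDFS directory, copy a local file into it
# (overwriting any existing copy) and list the result.
# HDFS_CMD defaults to the real `hdfs` CLI; set HDFS_CMD=echo for a dry run.
upload_to_hdfs() {
  local src="$1" dest_dir="$2" hdfs="${HDFS_CMD:-hdfs}"
  "$hdfs" dfs -mkdir -p "$dest_dir"
  "$hdfs" dfs -put -f "$src" "$dest_dir/"
  "$hdfs" dfs -ls "$dest_dir"
}

# Typical use after Step 6 (not executed here; census.csv is a placeholder):
#   jps | missing_daemons && upload_to_hdfs ./census.csv /user/hadoop/input
```

Chaining the two with `&&` means the upload only runs once every expected daemon is up, which keeps a half-started cluster from producing confusing HDFS errors.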

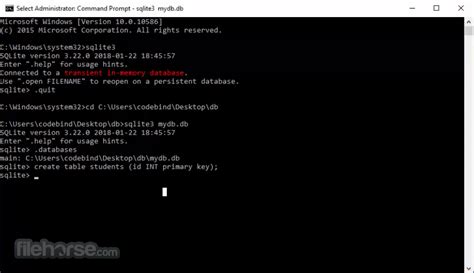

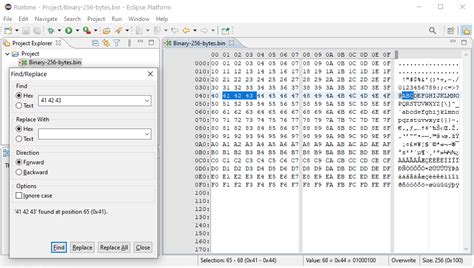

Installation of Hadoop on Windows

Hadoop breaks large data sets into manageable smaller pieces, which are then stored on clusters of commodity servers. This offers scalability and economy. Hadoop also uses MapReduce to process those pieces in parallel, so together with HDFS it can store and retrieve large volumes of data faster than a traditional database can. Traditional databases are great for handling predictable, steady workloads; beyond that, you need Hadoop’s scalable infrastructure.

5 Advantages of Hadoop for Big Data

Hadoop was created to deal with big data, so it’s hardly surprising that it offers so many benefits. The five main benefits are:

- Speed: Hadoop’s concurrent processing, MapReduce model, and HDFS let users run complex queries over very large data sets far faster than a single machine could.
- Diversity: HDFS can store different data formats, such as structured, semi-structured, and unstructured data.
- Cost-effectiveness: Hadoop is an open-source data framework.
- Resilience: Data stored in one node is replicated to other cluster nodes, ensuring fault tolerance.
- Scalability: Since Hadoop runs in a distributed environment, you can easily add more servers.

How Is Hadoop Being Used?

Hadoop is used across many sectors today, including the following.

1. Financial sector: Hadoop is used to detect fraud and to analyse fraud patterns. Credit card companies also use Hadoop to identify the right customers for their products.
2. Healthcare: Hadoop is used to analyse huge volumes of data from medical devices, clinical data, medical reports and similar sources. It scans the reports thoroughly, reducing manual work.
3. Retail: Retailers use Hadoop to improve their sales. Hadoop helps them track the products customers buy, predict price ranges for products, and sell their products online. These capabilities help the retail industry a lot.
4. Security and law enforcement: The National Security Agency of the USA uses Hadoop to help prevent terrorist attacks. Police use data tools to track criminals and anticipate their plans. Hadoop is also used in defence, cybersecurity and related fields.
5. Advertising: Hadoop is used for capturing video, analysing transactions and handling social media platforms. The data analysed is generated on social media platforms such as Facebook and Instagram. Hadoop is also used to promote products.

Hadoop has many more applications, in daily life as well as in the software sector.

Hadoop Use Case

In this case study, we discuss how Hadoop can help combat fraudulent activity, using the example of Zions Bancorporation. Their main challenge was how to support the Zions security team’s approaches to combating the fraudulent activity taking place. The problem was that they relied on an RDBMS, which was unable to store and analyze huge amounts of data.
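Earlier in this section, Hadoop is described as breaking data into smaller pieces and replicating the data stored on one node to other nodes. In HDFS those pieces are fixed-size blocks, so the storage footprint of a file is simple arithmetic. The sketch below assumes the usual Hadoop 2.x/3.x defaults of a 128 MiB block size (`dfs.blocksize`) and a replication factor of 3 (`dfs.replication`); the function names are hypothetical, and a single-node setup like the Ubuntu guide’s uses a replication factor of 1 instead.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Number of HDFS blocks a file of SIZE bytes occupies with block size BLOCK.
# Ceiling division: any remainder needs one extra (partial) block.
hdfs_blocks() {
  local size="$1" block="${2:-134217728}"   # 128 MiB, the usual dfs.blocksize default
  echo $(( (size + block - 1) / block ))
}

# Raw bytes consumed across the cluster: every block is stored REPLICATION times.
hdfs_raw_bytes() {
  local size="$1" replication="${2:-3}"     # 3 is the usual dfs.replication default
  echo $(( size * replication ))
}

# Example: a 600 MiB file.
size=$(( 600 * 1024 * 1024 ))
echo "blocks:    $(hdfs_blocks "$size")"
echo "raw bytes: $(hdfs_raw_bytes "$size")"
```

For the 600 MiB example this prints 5 blocks (four full 128 MiB blocks plus one 88 MiB block) and 1887436800 raw bytes, i.e. 1800 MiB across the cluster at replication 3. The final block only uses the space it needs on disk rather than a full 128 MiB, which is why raw usage is computed from the file size, not from the block count.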

(13+) or newer

Minimum system requirements

- Intel processors: Core i3 (dual core) or newer
- Apple Silicon processors (using Rosetta, 2024.1 and below)
- Apple Silicon processors (version 2024.2 or newer on macOS Ventura or newer)
- 4 GB memory or larger
- 2 GB HDD free or larger

Recommended requirements

- Intel Core i7 (quad core)
- 16 GB memory or larger
- 2 GB SSD free or larger

Virtual environments

Citrix environments, Microsoft Hyper-V, Parallels and VMware. All of Tableau’s products operate in virtualised environments when they are configured with the proper underlying Windows operating system and minimum hardware requirements. CPUs must support the SSE4.2 and POPCNT instruction sets, so any processor compatibility mode must be disabled.

Internationalisation

Tableau Prep is Unicode-enabled and compatible with data stored in any language. The user interface and supporting documentation are in English (US), English (UK), French (France), French (Canada), German, Italian, Spanish, Brazilian Portuguese, Swedish, Japanese, Korean, Traditional Chinese, Simplified Chinese and Thai.

Prep Conductor

For Prep Conductor technical specifications, see Tableau Server.

Tableau Prep data sources

Connect to the data you care about with Tableau Prep:

Amazon Athena, Amazon Aurora, Amazon EMR Hadoop Hive\*\*, Amazon Redshift, Alibaba AnalyticsDB, Alibaba Data Lake Analytics, Alibaba MaxCompute, Apache Drill\*\*, Box, ClickHouse, Cloudera Hadoop, Cloudera Impala, Databricks, Denodo, Dropbox, Exasol, Google Cloud SQL, Google Drive, Hortonworks Hadoop Hive\*\*, HP Vertica, IBM BigInsights, IBM DB2, IBM PDA (Netezza), JDBC, Kognitio, Kyvos\*\*, MapR Hadoop Hive, MariaDB, MarkLogic 7.0 and 8.0, SingleStore (MemSQL), Microsoft Access, Microsoft Excel, Microsoft Azure Data Lake Gen 2, Microsoft Azure Synapse, Microsoft SQL Server, Monet DB, Mongo DB, MySQL, ODBC, OneDrive, Oracle, Oracle Netsuite, PDF, Pivotal Greenplum Database\*\*, PostgreSQL, Presto, Qubole Presto, Salesforce, Salesforce Data Cloud, Salesforce Datorama, Salesforce Marketing Cloud, SAP HANA, SAP Sybase IQ\*, SAP Sybase ASE\*, Snowflake, SparkSQL\*\*, Splunk, Statistical files, Tableau extracts, Tableau published data sources, Teradata, Text file.

Seamlessly connect Tableau to additional data sources with Connectors on the Tableau Exchange.

\* Available for Windows only

\*\* Not available in Tableau for Apple Silicon

Tableau Server requirements

Tableau Server

- Web browsers: Chrome on Windows, Mac and Android; Microsoft Edge on Windows; Mozilla Firefox & Firefox ESR on Windows and Mac; Apple Safari on Mac and iOS
- Tableau Mobile: iOS and Android apps, available from the Apple App Store and Google Play Store respectively

Minimum requirements for

Install or Upgrade the Workload Automation System Agent

You can install or upgrade the Workload Automation System Agent by using either an interactive program or a command-based silent installer. The installer provides an option to upgrade the agent. See step 7 for links to upgrade instructions.

During installation of the System Agent, the installer installs all the binaries associated with the System Agent and all the plug-ins, including Hadoop AI. Each plug-in must be enabled and configured using PluginInstaller and the respective cfg file. All the plug-in cfg files are added to the addons directory in the agent install directory when the System Agent is installed.

During an upgrade of the System Agent, the installer upgrades all the binaries associated with the System Agent and all the plug-ins, including Hadoop AI. Existing plug-ins, including Hadoop AI, that were enabled before the upgrade remain enabled and functional after the upgrade. Additional plug-ins can be enabled and configured using PluginInstaller and the respective cfg file.

If you are installing multiple agents, the silent installer lets you automate the installation process. After you install the agent, you can configure it to change your settings or to implement more features. You also set up security features after the agent is installed.

On Windows, when you install the agent in AutoSys Workload Automation Agent Configuration mode, the installer adds entries to the Windows registry. If the registry entry for `<Agent Name>` already exists, an agent installation with the same `<Agent Name>` already exists, and the installation fails with an error message.

Install or upgrade the agent using one of these methods:

The version of InstallAnywhere shipped with the agent does not support Windows Server 2012 R2. To use the installer on that platform, run in Windows

August 30, 2016 3 minute read My latest Pluralsight course is out now: Hadoop for .NET DevelopersIt takes you through running Hadoop on Windows and using .NET to write MapReduce queries - proving that you can do Big Data on the Microsoft stack.The course has five modules, starting with the architecture of Hadoop and working through a proof-of-concept approach, evaluating different options for running Hadoop and integrating it with .NET.1. Introducing HadoopHadoop is the core technology in Big Data problems - it provides scalable, reliable storage for huge quantities of data, and scalable, reliable compute for querying that data. To start the course I cover HDFS and YARN - how they work and how they work together. I use a 600MB public dataset (from the 2011 UK census), upload it to HDFS and demonstrate a simple Java MapReduce query. Unlike my other Pluralsight course, Real World Big Data in Azure, there are word counts in this course - to focus on the technology, I keep the queries simple for this one.2. Running Hadoop on WindowsHadoop is a Java technology, so you can run it on any system with a compatible JVM. You don’t need to run Hadoop from the JAR files though, there are packaged options which make it easy to run Hadoop on Windows. I cover four options: Hadoop in Docker - using my Hadoop with .NET Core Docker image to run a Dockerized Hadoop cluster; Hortonworks Data Platform, a packaged Hadoop distribution which is available for Linux and Windows; Syncfusion’s Big Data Platform, a new Windows-only Hadoop distribution which has a friendly UI; Azure HDInsight, Microsoft’s managed Hadoop platform in the cloud. If you’re starting out with Hadoop, the Big Data Platform is a great place to start - it’s a simple two-click install, and it comes with lots of sample code.3. Working with Hadoop in .NETJava is the native programming language for MapReduce queries, but Hadoop provides integration for any language with the Hadoop Streaming API. 
I walk through building a MapReduce program with the full .NET Framework, then using .NET Core, and compare those options with Microsoft’s Hadoop SDK for .NET (spoiler: the SDK is a nice framework, but hasn’t seen much activity for a while). Using .NET Core for MapReduce jobs gives you the option to write queries in C# and run them on Linux or Windows clusters, as I blogged about in Hadoop and .NET Core - A Match Made in Docker.4. Querying Data with MapReduceBasic MapReduce jobs are easy with .NET and .NET Core, but in this module we look at more advanced functionality and see how to write performant, reliable .NET MapReduce jobs. In this module I extend the .NET queries to

2025-04-09Use: combiners; multiple reducers; the distributed cache; counters and logging. You can run Hadoop on Windows and use .NET for queries, and still make use of high-level Hadoop functionality to tune your queries.5. Navigating the Hadoop EcosystemHadoop is a foundational technology, and querying with MapReduce gives you a lot of power - but it’s a technical approach which needs custom-built components. A whole ecosystem has emerged to take advantage of the core Hadoop foundations of storage and compute, but make accessing the data faster and easier. In the final module, I look at some of the major technologies in the ecosystem and see how they work with Hadoop, and with each other, and with .NET: Hive - querying data in Hadoop with a SQL-like language; HBase - a real-time Big Data NoSQL database which uses Hadoop for storage; Spark - a compute engine which uses Hadoop, but caches data in-memory to provide fast data access. If the larger ecosystem interests you, I go into more depth with a couple of free eBooks: Hive Succinctly and HBase Succinctly, and I also cover them in detail on Azure in my Pluralsight course HDInsight Deep Dive: Storm, HBase and Hive.The goal of Hadoop for .NET Developers is to give you a thorough grounding in Hadoop, so you can run your own PoC using the approach in the course, to evaluate Hadoop with .NET for your own datasets.

2025-03-30Group: Apache HadoopApache Hadoop CommonLast Release on Oct 18, 2024Apache Hadoop Client aggregation pom with dependencies exposedLast Release on Oct 18, 2024Apache Hadoop HDFSLast Release on Oct 18, 2024Apache Hadoop MapReduce CoreLast Release on Oct 18, 2024Hadoop CoreLast Release on Jul 24, 2013Apache Hadoop AnnotationsLast Release on Oct 18, 2024Apache Hadoop Auth - Java HTTP SPNEGOLast Release on Oct 18, 2024Apache Hadoop Mini-ClusterLast Release on Oct 18, 2024Apache Hadoop YARN APILast Release on Oct 18, 2024Apache Hadoop MapReduce JobClientLast Release on Oct 18, 2024Apache Hadoop YARN CommonLast Release on Oct 18, 2024Apache Hadoop MapReduce CommonLast Release on Oct 18, 2024Apache Hadoop YARN ClientLast Release on Oct 18, 2024This module contains code to support integration with Amazon Web Services.It also declares the dependencies needed to work with AWS services.Last Release on Oct 18, 2024Apache Hadoop HDFS ClientLast Release on Oct 18, 2024Apache Hadoop MapReduce AppLast Release on Oct 18, 2024Apache Hadoop YARN Server TestsLast Release on Oct 18, 2024Apache Hadoop MapReduce ShuffleLast Release on Oct 18, 2024Hadoop TestLast Release on Jul 24, 2013Apache Hadoop YARN Server CommonLast Release on Oct 18, 2024Prev12345678910Next

2025-04-04
Hadoop is a distributed computing framework for storing and processing massive datasets. It runs on Ubuntu and provides scalable data storage and parallel processing. Installing Hadoop lets you handle big data workloads efficiently and extract insights from your data.

To install Hadoop on Ubuntu, complete the following steps:
- Install Java.
- Create a user.
- Download Hadoop.
- Configure the environment.
- Configure Hadoop.
- Start Hadoop.
- Access the web interface.

Contents:
Prerequisites to Install Hadoop on Ubuntu
Complete Steps to Install Hadoop on Ubuntu
    Step 1: Install Java Development Kit (JDK)
    Step 2: Create a dedicated user for Hadoop & Configure SSH
    Step 3: Download the latest stable release
    Step 4: Configure Hadoop Environment Variables
    Step 5: Configure Hadoop
    Step 6: Start the Hadoop Cluster
    Step 7: Open the web interface
What is Hadoop and Why Install it on Linux Ubuntu?
What are the best Features and Advantages of Hadoop on Ubuntu?
What to do after Installing Hadoop on Ubuntu?
How to Monitor the Performance of the Hadoop Cluster?
Why Are Hadoop Services Not Starting on Ubuntu?
How to Troubleshoot Issues with HDFS?
Why Are My MapReduce Jobs Failing?
Conclusion

Prerequisites to Install Hadoop on Ubuntu
Before installing Hadoop on Ubuntu, make sure your system meets the following requirements:
- A Linux VPS running Ubuntu.
- A non-root user with sudo privileges.
- Access to a terminal/command line.

Complete Steps to Install Hadoop on Ubuntu
Once the prerequisites above are in place (including a Linux VPS), you are ready to follow the steps in this guide. By the end, you will be able to use Hadoop to manage and analyze large datasets efficiently.

Step 1: Install Java Development Kit (JDK)
Hadoop requires Java, so install the default JDK and JRE with:

    sudo apt install default-jdk default-jre -y

Then verify the installation by checking the Java version:

    java -version

Output:

    java version "11.0.16" 2021-08-09 LTS
    OpenJDK 64-Bit Server VM (build 11.0.16+8-Ubuntu-0ubuntu0.22.04.1)

If Java is installed, you'll see version information like this; the exact strings depend on your Ubuntu release and JDK package.

Step 2: Create a dedicated user for Hadoop & Configure SSH
Create a new user named hadoop:

    sudo adduser hadoop

Add the user to the sudo group:

    sudo usermod -aG sudo hadoop

Switch to the hadoop user:

    sudo su - hadoop

Install the OpenSSH server and client:

    sudo apt install openssh-server openssh-client -y

Then generate an SSH key pair:

    ssh-keygen -t rsa

Notes:
- Press Enter to save the key to the default location.
- You can optionally set a passphrase, but Hadoop's start scripts expect passwordless SSH to localhost, so leave it empty unless you use an SSH agent.

Now add the public key to authorized_keys:

    cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

To set permissions
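Step 1 verifies Java by reading the output by eye. A scripted check can parse the first line of `java -version` output, which the JDK writes to standard error. The sketch below is not part of the original guide: the function names are hypothetical, legacy strings such as 1.8.0_362 are treated as Java 8, and the default minimum of 8 is a placeholder you should set from the release notes of the Hadoop version you install.

```sh
#!/bin/sh
# Extract the major Java version from the first line of `java -version`,
# e.g. 'java version "11.0.16" 2021-08-09 LTS' -> 11.
java_major() {
  local v
  # Take the text between the quotes that follow "version".
  v=$(printf '%s\n' "$1" | sed -nE 's/.*version "([^"]+)".*/\1/p')
  [ -n "$v" ] || return 1
  # Legacy numbering: "1.8.0_362" means Java 8.
  case "$v" in
    1.*) v=${v#1.} ;;
  esac
  # Keep everything before the first '.', '_' or '-'.
  printf '%s\n' "${v%%[._-]*}"
}

# Compare the installed JDK with a minimum major version (placeholder default: 8).
require_java() {
  local min line major
  min=${1:-8}
  command -v java >/dev/null 2>&1 || { echo "java not found on PATH" >&2; return 1; }
  line=$(java -version 2>&1 | head -n 1)
  major=$(java_major "$line") || { echo "could not parse: $line" >&2; return 1; }
  if [ "$major" -lt "$min" ]; then
    echo "Java $major found; need $min or newer" >&2
    return 1
  fi
  echo "Java $major OK"
}
```

Usage: source the file, then run `require_java` (or `require_java 11` for a stricter minimum).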

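Running the `cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys` command from Step 2 a second time appends the same key again. A minimal sketch of a guard, using a hypothetical function name, appends the public key only when an identical line is not already present. It defaults to the same paths as Step 2 and leaves file permissions to the separate permissions step.

```sh
#!/bin/sh
# Append a public key to authorized_keys only if it is not already there.
# Defaults mirror Step 2: ~/.ssh/id_rsa.pub and ~/.ssh/authorized_keys.
append_key_once() {
  local pub auth key
  pub=${1:-$HOME/.ssh/id_rsa.pub}
  auth=${2:-$HOME/.ssh/authorized_keys}
  [ -r "$pub" ] || { echo "public key not readable: $pub" >&2; return 1; }
  key=$(head -n 1 "$pub")
  [ -n "$key" ] || { echo "public key file is empty: $pub" >&2; return 1; }
  touch "$auth" || return 1
  # -q: quiet, -x: match the whole line, -F: treat the key as a fixed string.
  if grep -qxF -- "$key" "$auth"; then
    echo "key already present in $auth"
  else
    printf '%s\n' "$key" >> "$auth"
    echo "key added to $auth"
  fi
}
```

Because the check matches whole lines, re-running the setup is safe and leaves exactly one copy of the key.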
2025-04-05
Run the following command:

    hdfs namenode -format

This initializes the Hadoop Distributed File System (HDFS).

Step 6: Start the Hadoop Cluster
Start the NameNode and DataNode:

    start-dfs.sh

Start the ResourceManager and NodeManager:

    start-yarn.sh

Check the running processes:

    jps

You should see processes such as NameNode, DataNode, ResourceManager, and NodeManager. If they are all running, you are ready to access the Hadoop web interface.

Step 7: Open the web interface
Using your server's IP address, open the Hadoop web interface in your web browser. The DataNodes and the YARN ResourceManager each have their own web URL as well. The ResourceManager interface is an essential tool for monitoring the applications running in your Hadoop cluster.

What is Hadoop and Why Install it on Linux Ubuntu?
Hadoop is a distributed computing framework designed to store and process massive amounts of data efficiently. It runs on various operating systems, including Ubuntu, and offers scalable data storage and parallel processing. Installing Hadoop on Ubuntu lets you handle big data workloads, extract insights, and perform complex analyses that would be impractical on a single machine.

What are the best Features and Advantages of Hadoop on Ubuntu?
- Scalability: Scale a Hadoop cluster to handle growing data volumes by adding more nodes.
- Fault tolerance: Data is replicated across multiple nodes, which keeps it durable and available.
- Parallel processing: Hadoop distributes processing tasks across multiple nodes, which speeds up jobs.
- Cost-effectiveness: Hadoop runs on commodity hardware, which keeps big data processing affordable.
- Open source: Hadoop is freely available and has a large, active community providing support and development.
- Integration with other tools: Hadoop works with other big data tools such as Spark, Hive, and Pig, which extend its capabilities.
- Flexibility: Hadoop supports various data formats and can be customized for specific use cases.

What to do after Installing Hadoop on Ubuntu?
- Configure and start the Hadoop cluster: Set up Hadoop services such as the NameNode, DataNode, ResourceManager, and NodeManager.
- Load data into HDFS: Upload your data files to the Hadoop Distributed File System (HDFS) for storage and processing.
- Run MapReduce jobs: Use MapReduce for data processing tasks such as word counting, filtering, and aggregation.
- Use other Hadoop components: Explore tools such as Hive, Pig, and Spark for more advanced data analysis and machine learning.
- Monitor and manage the cluster: Use the Hadoop web interface to monitor resource usage and job execution, and to troubleshoot issues.
- Integrate with other systems: Connect Hadoop
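The `hdfs namenode -format` command and the Step 6 start-up can be wrapped in one script. This is a sketch under stated assumptions: formatting should run only once, because formatting again wipes the existing HDFS namespace metadata, so the script skips it when the NameNode storage directory already contains current/VERSION (a file the format step creates). The directory you pass is a placeholder that must match dfs.namenode.name.dir in your configuration, and the expected daemon list simply mirrors the processes named in Step 6. The function names are hypothetical.

```sh
#!/bin/sh
# Daemons Step 6 expects `jps` to list on a single-node setup.
EXPECTED_DAEMONS="NameNode DataNode ResourceManager NodeManager"

# Succeed if the NameNode storage directory already looks formatted.
# $1: NameNode storage directory (placeholder; match dfs.namenode.name.dir).
namenode_formatted() {
  [ -f "$1/current/VERSION" ]
}

# Read `jps` output ("<pid> <MainClass>" per line) on stdin and print each
# expected daemon that is missing. Exact matching keeps SecondaryNameNode
# from being mistaken for NameNode.
missing_daemons() {
  local running d
  running=$(awk '{print $2}')
  for d in $EXPECTED_DAEMONS; do
    printf '%s\n' "$running" | grep -qxF -- "$d" || echo "$d"
  done
}

# Format only if needed, start HDFS and YARN, then verify with jps.
start_cluster() {
  local name_dir missing
  name_dir=$1
  if [ -z "$name_dir" ]; then
    echo "usage: start_cluster NAME_DIR" >&2
    return 1
  fi
  if namenode_formatted "$name_dir"; then
    echo "NameNode already formatted; skipping format"
  else
    hdfs namenode -format || return 1
  fi
  start-dfs.sh || return 1
  start-yarn.sh || return 1
  # Daemons can take a few seconds to appear; re-run jps if one is missing.
  missing=$(jps | missing_daemons)
  if [ -n "$missing" ]; then
    echo "not running: $(printf '%s' "$missing" | tr '\n' ' ')" >&2
    return 1
  fi
  echo "all expected daemons are running"
}
```

Usage: `start_cluster /path/to/namenode/dir`, where the path is whatever your configuration uses.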

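The first items under "What to do after Installing Hadoop on Ubuntu?" (loading data into HDFS and running a MapReduce job such as word counting) can be sketched as below. Assumptions: HADOOP_HOME points at your Hadoop installation, the bundled examples JAR sits under share/hadoop/mapreduce as in the Apache binary release, and the HDFS paths are placeholders. The function names are hypothetical. Setting DRY_RUN=1 prints the commands instead of running them, which helps check paths before touching the cluster.

```sh
#!/bin/sh
# Run a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Locate the MapReduce examples JAR (assumed Apache binary release layout).
examples_jar() {
  local jar
  if [ -z "${HADOOP_HOME:-}" ]; then
    echo "HADOOP_HOME is not set" >&2
    return 1
  fi
  for jar in "$HADOOP_HOME"/share/hadoop/mapreduce/hadoop-mapreduce-examples-*.jar; do
    if [ -f "$jar" ]; then
      printf '%s\n' "$jar"
      return 0
    fi
  done
  echo "examples JAR not found under $HADOOP_HOME" >&2
  return 1
}

# Upload a local file to HDFS, run the bundled wordcount job, print the result.
# $1: local file  $2: HDFS input dir  $3: HDFS output dir (must not exist yet,
# otherwise the job refuses to start)
wordcount_file() {
  local file in_dir out_dir jar
  file=$1 in_dir=$2 out_dir=$3
  [ -f "$file" ] || { echo "no such file: $file" >&2; return 1; }
  [ -n "$in_dir" ] && [ -n "$out_dir" ] || { echo "input and output dirs required" >&2; return 1; }
  jar=$(examples_jar) || return 1
  run hdfs dfs -mkdir -p "$in_dir" || return 1
  run hdfs dfs -put -f "$file" "$in_dir/" || return 1
  run hadoop jar "$jar" wordcount "$in_dir" "$out_dir" || return 1
  # Quoted so HDFS, not the local shell, expands the glob.
  run hdfs dfs -cat "$out_dir/part-r-*"
}
```

For example, `DRY_RUN=1 wordcount_file words.txt /user/hadoop/input /user/hadoop/output` shows the four commands; drop DRY_RUN to run them.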
2025-04-19